The AI Learning Curve: Why Devs Get Slower Before They Get Faster

Are you willing to be slower today to be faster next year?

In the most recent episode of Coder’s [DEV]olution podcast, "Are We Shipping Code We Don’t Trust? The AI Productivity Paradox," Jason Baum, Project Leader at Selenium, discussed what happens when AI promises speed but delivers friction instead. The conversation raised a question that platform engineering teams everywhere are wrestling with: why are experienced developers slowing down with AI tools, and what does that mean for organizations investing in these technologies?

At Coder, we see this pattern daily. Teams adopt AI coding agents expecting immediate velocity gains, only to watch productivity dip in the first few months. Often the culprit is not the AI itself but the infrastructure beneath it. When your development environments can't keep pace with AI experimentation, you can't tell whether the slowdown comes from the learning curve or from systems breaking under new patterns of use.

The paradox of "faster" tools

Every developer loves a shortcut. So when AI coding tools showed up promising that you'd write less code, ship faster, and automate the boring stuff, of course developers leapt at the chance to bring them into their work.

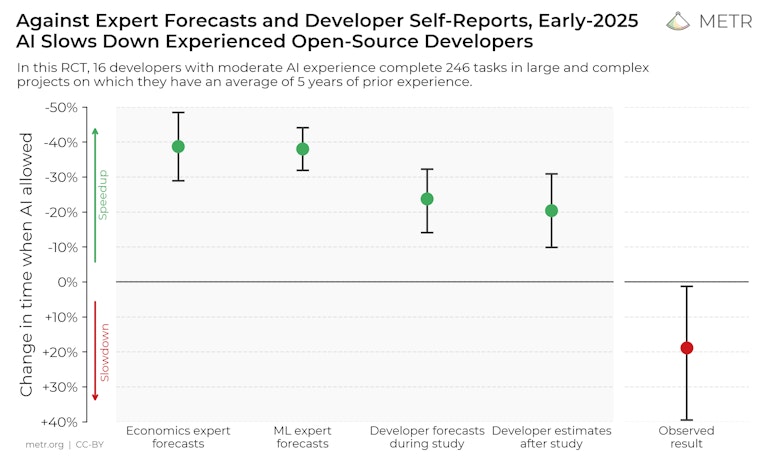

Then the data came in, and it was weird. At least one widely cited study showed that experienced developers were actually slower when using AI: METR found that experienced open-source developers working in codebases they already knew well took 19 percent longer to complete tasks when they used AI tools. People got confused. Some got defensive. How could tools built to speed things up end up slowing developers down?

Here's the thing, though: this happens every time we change how we build software. Version control, automated testing, CI/CD pipelines. All of them made us slower at first. Teams had to pause, learn, and deal with the friction. The AI slowdown is just the latest instance of that. Developers aren't getting worse; they're relearning how to think. And that always takes longer than anyone wants to admit.

Why the slowdown happens

AI changes the rhythm of how you actually work. You used to be both creator and reviewer. You wrote the code, you caught your own mistakes. Now you're more like a collaborator. You prompt the model, review what it gives you, test it, then decide: keep it, tweak it, or throw it out.

The code shows up instantly, but figuring out whether it's actually good takes time, and that's where things get messy. Developers who rushed to adopt generative AI coding assistants often stalled, bogged down in low-quality code or in code that looked fine but ultimately failed in production.

Jason Baum illustrated this on our [DEV]olution podcast in a way that stuck with me: "We're running before we walk with AI."

Developers are still figuring out the pacing. When is the model right? When is it confidently wrong? When has it just completely lost the plot?

Last quarter, I watched a team go through this exact phase. Their first few sprints with AI-assisted tooling felt clumsy. They spent more time debugging than building. But by the third sprint, something clicked. Their reviews got tighter. They started spotting issues faster. The slowdown wasn't failure; it was just what learning looks like when you can actually see it happening.

The trust gap

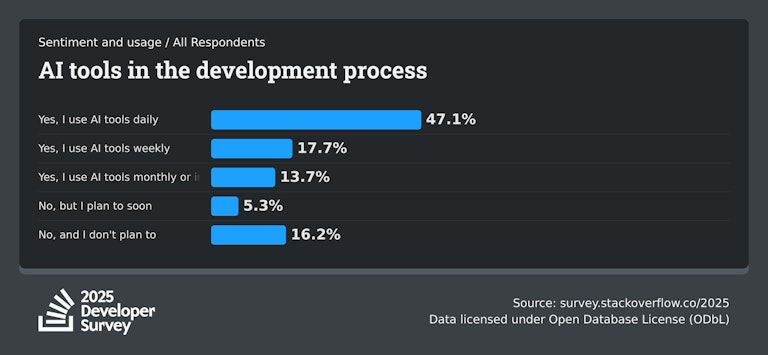

Trust sits at the center of all this. Stack Overflow's 2025 Developer Survey found that 84 percent of developers use or plan to use AI tools, but only 33 percent trust the accuracy of their output. That's a massive gap.

And it's not fear of the tech. It's that nobody really knows how it's going to behave. Developers trust things they understand. They trust compilers because compilers are deterministic and predictable. They don't trust probabilistic models that might produce perfect code one minute and introduce a silent bug the next. And honestly? That skepticism is healthy.

Closing that gap takes repetition, not marketing. Developers need to see patterns of reliability over time. I've seen teams build that trust slowly by running AI-generated code through the same review pipelines they use for everything else. At first, every suggestion got torn apart, but soon they were merging small changes without anxiety. The process built trust because it looked like every other engineering habit: test, review, verify, repeat.

The goal isn't blind faith. It's confidence through verification. Unit tests built trust in refactoring the same way, by making the invisible visible.

The long view

Over the next six months or so, this curve should start to flatten. Developers who stick with AI tools will move past the awkward discovery phase into something closer to fluency. They'll learn to frame prompts the way they design APIs: clear inputs, defined expectations, repeatable outcomes. They'll figure out which parts of the stack actually benefit from AI and which parts still need a human making the call.

Jason put it well on the podcast: "The best developers aren't the ones who avoid new tools. They're the ones who learn how to question them." It’s a simple line, but it captures the whole point of this phase: learning how to think again before we automate everything.

That mindset is what separates teams that really integrate AI from teams that just play with it. The advantages show up quietly at first: documentation gets better, feedback loops tighten, test coverage improves. But they compound. Developers who once spent hours debugging generated code will shift to designing systems, faster and with fewer regressions. The ones willing to be "slow" now will be the fastest later.

Redefining "productivity"

We've measured productivity in software the same way for decades: lines of code, commits, tickets closed. AI breaks that completely. When a model can spit out hundreds of lines in seconds, raw output stops meaning anything. What actually matters is how quickly you can get from an idea to a reliable, maintainable solution, and that takes understanding, not volume.

The slowdown happening right now is that transition. Devs are putting in time upfront to use AI responsibly so they can move faster later without breaking things. The metric that'll matter going forward is probably something like cognitive velocity: how fast teams can understand problems, evaluate solutions, and deliver stable results.

It's slow at first because comprehension takes time. But once that becomes muscle memory, the multiplier effect is going to be huge.

Why infrastructure is an AI enabler

At Coder, this is the shift we're built for. Organizations adopting AI need infrastructure that supports exploration without risking production. Self-hosted cloud development environments give developers and AI agents the same consistent, secure workspace every time.

Jason called this out on the podcast: "When companies start giving AI agents real access to environments they don't fully control, they're opening the door to chaos. It's not just a productivity issue. It's a security one."

He's right. The risk isn't just that AI slows you down; it's that inconsistent infrastructure makes it impossible to diagnose issues, trust results, or maintain your security posture. When AI agents start autonomously generating and executing code, you need environments you can govern completely.

This is why consistent, production-like workspaces matter more than ever. They provide a predictable foundation that fosters faster, safer learning. The goal is twofold: speed and stability. You need to build trust in new workflows while maintaining control over what's running where, who has access, and what data is exposed.

Coder provides exactly this: reproducible ephemeral environments that developers and AI agents can spin up in seconds, all running on infrastructure you control. Whether you're in financial services managing compliance requirements or a tech company scaling AI adoption across hundreds of developers, you get the security of self-hosted infrastructure with the speed of cloud-native tooling. The result: every workspace, whether it's used by a human developer or an AI agent, starts from the same known-good state. No configuration drift. No "works on my machine" surprises. That consistency is what makes AI experimentation possible without the chaos.

The real lesson

Every big shift in software development starts with discomfort. The tools promise speed, but using them at first feels like walking through mud. The developers who push through, who are willing to be patient while they figure this out, end up faster, better, and more confident on the other side.

AI isn't killing developer productivity. It's redefining what productivity even means. The slowdown isn't a problem; it's the investment you make to actually get somewhere.

What surprised me most in all these conversations? It's not that AI makes developers slower. It's that the best teams are choosing to be slower right now. They're deliberately taking time to build the right habits, even when it feels inefficient. That kind of patience is rare in an industry that treats velocity like a religion.

So, I’ll ask again: Are you willing to be slower today to be faster next year?

Because that's the actual trade-off, and I'm not convinced everyone realizes they're making it.

Listen to the full conversation

Want to hear more about why AI tools create friction before they deliver value and what this shift means for software development? Tune in to the [DEV]olution podcast episode with Selenium’s Jason Baum, who has forgotten more about developer productivity and testing than most people will ever know, to dig into all of this.

You can find the full episode on YouTube, Spotify, or Apple Podcasts.
