Controlling Remote Development Costs on Public Clouds

One thing I enjoy about interacting with prospects and customers is listening to what really matters to them.

One topic they consistently ask Coder about is how to control their on-premises and, more importantly, their public cloud computing costs.

When you move development environments off local machines like laptops and onto server-based infrastructure, the compute required to power IDEs and development tasks such as builds can quickly add up on your public cloud bill.

Always on means always billing

The ease with which developers can spin up cloud resources like Kubernetes pods, containers, and VMs keeps them productive. However, public clouds bill for resources for as long as they are running, so unless you remember to stop them, you and your organization will be billed even while those resources sit idle.
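To make the cost of idle resources concrete, here is a minimal Python sketch. It compares a VM that is never stopped with one that runs only during an 8-hour workday. The $1.00 hourly rate and the 22 workdays per month are hypothetical round numbers, not a quoted price for any instance type.

```python
# Hypothetical on-demand price for one development VM, in USD per hour.
# This is an illustrative round number, not a real provider quote.
HOURLY_RATE_USD = 1.00


def monthly_cost(hours_per_day: float, days_per_month: int,
                 rate: float = HOURLY_RATE_USD) -> float:
    """Cost of a resource that is billed for every hour it is running."""
    return hours_per_day * days_per_month * rate


always_on = monthly_cost(24, 30)      # never stopped, 30-day month
workday_only = monthly_cost(8, 22)    # stopped outside 8-hour workdays
savings = 1 - workday_only / always_on

print(f"always on:    ${always_on:,.2f}")
print(f"workday only: ${workday_only:,.2f}")
print(f"savings:      {savings:.0%}")
```

Under these assumptions, stopping the VM outside working hours lowers its monthly bill from $720 to $176, roughly a three-quarters reduction.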

Public cloud providers are also starting to offer development environment solutions. These are essentially VMs with some third-party IDE integration, designed to drive cloud compute consumption. Examples include Amazon CodeCatalyst, GitHub Codespaces, Microsoft Dev Box, and Google Cloud Workstations. Their compute options are limited, which can lead to a suboptimal and expensive experience. For example, you may have to choose a larger instance type to get more storage even though you do not need more CPU cores or memory. At the end of the day, these providers have no financial incentive to offer controls that limit compute costs.

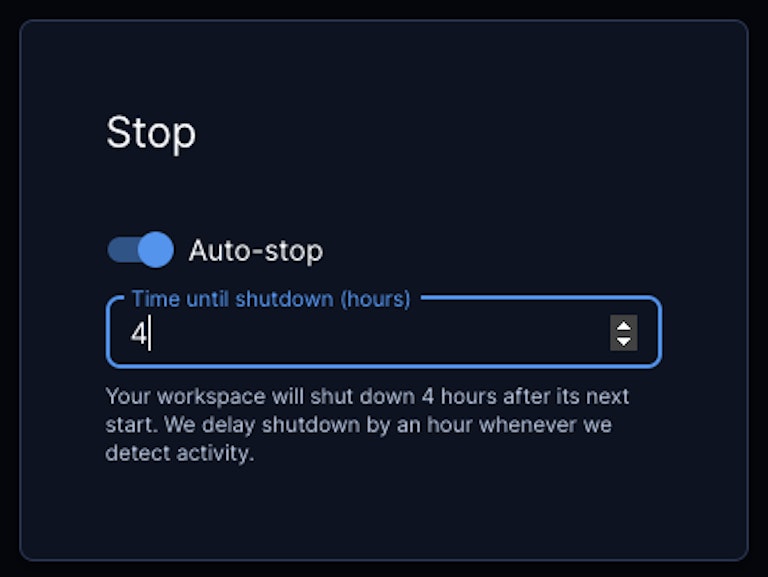

Auto-stop development environments

In Coder OSS, we provide controls that let developers auto-stop their development environments after a predefined amount of time. A developer may still be actively using the workspace when that time is up, e.g., through an active SSH connection from a locally installed IDE, a browser-based IDE, or a web terminal. In that case, Coder will not stop the development environment and interrupt the developer's flow. Instead, it checks again for inactivity an hour later.
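The inactivity check described above can be sketched as a small decision function. This is a minimal illustration, not Coder's implementation. The `Workspace` fields, the `evaluate_autostop` name, and the use of a count of active connections as the activity signal are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical re-check interval, matching the "check again an hour later" behavior.
EXTENSION = timedelta(hours=1)


@dataclass
class Workspace:
    name: str
    deadline: datetime           # when the auto-stop timer expires
    active_connections: int = 0  # SSH, browser IDE, or web terminal sessions


def evaluate_autostop(ws: Workspace, now: datetime) -> str:
    """Decide what to do with a workspace on one scheduler tick.

    Returns "running" before the deadline, "extended" if the developer is
    still connected at the deadline, and "stop" if the workspace is idle.
    """
    if now < ws.deadline:
        return "running"
    if ws.active_connections > 0:
        # Don't interrupt the developer: push the deadline out and re-check later.
        ws.deadline = now + EXTENSION
        return "extended"
    return "stop"


ws = Workspace("alice-dev", deadline=datetime(2024, 1, 8, 17, 0), active_connections=1)
print(evaluate_autostop(ws, datetime(2024, 1, 8, 16, 0)))   # -> running
print(evaluate_autostop(ws, datetime(2024, 1, 8, 17, 5)))   # -> extended (to 18:05)
ws.active_connections = 0
print(evaluate_autostop(ws, datetime(2024, 1, 8, 18, 10)))  # -> stop
```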

The auto-stop feature is especially important when deploying development environments as VMs. VMs dedicate compute to each developer and are therefore more expensive than containers running on shared infrastructure like Kubernetes.

Sizing the remote development platform

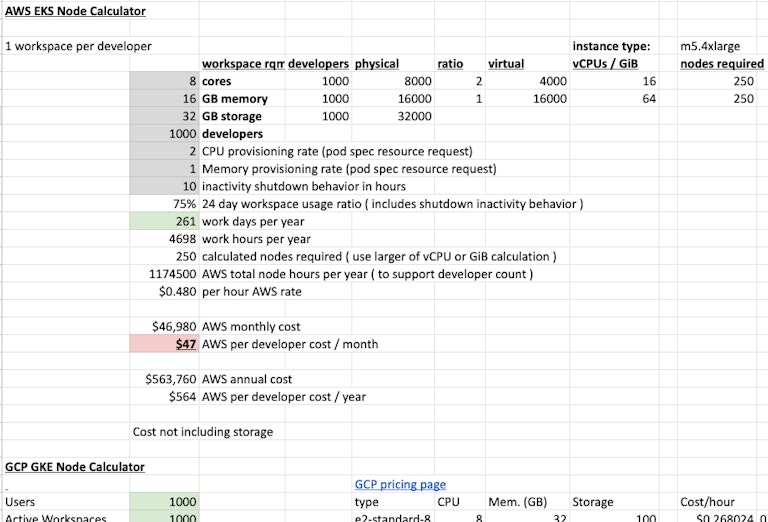

During evaluations of Coder, DevOps leaders often ask us for advice on how to size their Kubernetes infrastructure. The answer is "it depends." The key input variables are the compute required for an average development environment, the number of users, and the number of development environments you expect them to run.

Once you know these input variables, you can model different machine types to determine the number of Kubernetes nodes (i.e., VMs) required to run the Coder control plane and the development environments. One example is an AWS m5.4xlarge VM with 16 vCPUs and 64 GiB of memory. Development environment storage needs to be accounted for, but it tends to be a smaller factor than CPU and memory. Coder also stores its state in an external PostgreSQL database, which must be sized based on user count and load.
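The node-count model can be sketched in a few lines of Python. The sketch assumes every workspace is the same size and that a fixed slice of each node is reserved for Kubernetes system components. The 1 vCPU / 4 GiB reservation, the 2 vCPU / 4 GiB workspace, and the 200 concurrent workspaces are illustrative inputs, not recommendations. Control plane nodes and the PostgreSQL database would be sized separately.

```python
import math

# m5.4xlarge capacity from the text: 16 vCPUs and 64 GiB of memory.
NODE_CPU, NODE_MEM_GIB = 16, 64
# Assumed capacity reserved per node for the OS, kubelet, and system pods.
RESERVED_CPU, RESERVED_MEM_GIB = 1, 4


def workspaces_per_node(ws_cpu: float, ws_mem_gib: float) -> int:
    """How many identical workspaces fit on one node; the scarcer resource wins."""
    usable_cpu = NODE_CPU - RESERVED_CPU
    usable_mem = NODE_MEM_GIB - RESERVED_MEM_GIB
    return min(math.floor(usable_cpu / ws_cpu), math.floor(usable_mem / ws_mem_gib))


def nodes_required(concurrent_workspaces: int, ws_cpu: float, ws_mem_gib: float) -> int:
    """Workspace nodes needed for a given number of concurrently running workspaces."""
    per_node = workspaces_per_node(ws_cpu, ws_mem_gib)
    if per_node == 0:
        raise ValueError("a single workspace does not fit on this machine type")
    return math.ceil(concurrent_workspaces / per_node)


# Illustrative inputs: 200 concurrent workspaces at 2 vCPUs / 4 GiB each.
print(workspaces_per_node(2, 4))   # -> 7 (CPU runs out first)
print(nodes_required(200, 2, 4))   # -> 29 workspace nodes
```

To compare options, substitute another machine type's capacity for `NODE_CPU` and `NODE_MEM_GIB` and see how the node count changes.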

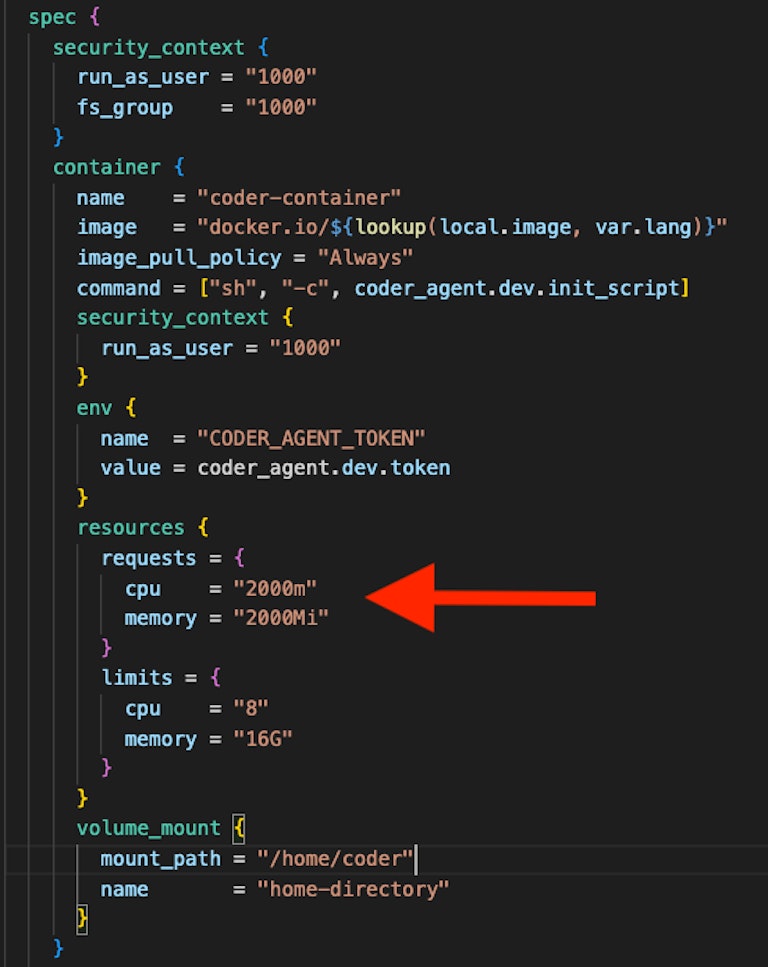

Resource Requests and Limits

Additional input variables also affect Kubernetes node sizing. These include auto-stop settings and Kubernetes pod resource requests.

For example, a developer may think they are getting 8 CPU cores and 16 GiB of memory. However, setting lower resource requests in the pod spec of a Terraform-based workspace template reserves less compute for that developer up front, which saves you cloud cost.

Lower resource requests mean fewer Kubernetes nodes are required to meet the development environments' needs. This works because developers typically spend most of their workday typing code in the IDE's editor, which needs relatively little compute. Sometimes developers need more compute, e.g., for a Bazel or Maven build or to start a machine learning model training job. When the node has spare capacity, the development environment can then burst up to the CPU and memory limits originally specified.
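Kubernetes schedules pods based on their resource requests, not their limits, which is why lowering requests lets more workspaces share a node. The sketch below uses a 16 vCPU / 64 GiB node and compares two cases. In the first, requests equal the 8 vCPU / 16 GiB limits from the example. In the second, requests are a hypothetical 2 vCPUs / 4 GiB. For simplicity, it ignores system-reserved capacity.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    cpu: float      # vCPUs
    mem_gib: float  # GiB


def pods_per_node(node: Resources, request: Resources) -> int:
    """The scheduler reserves only requests, so packing depends on requests."""
    return min(math.floor(node.cpu / request.cpu),
               math.floor(node.mem_gib / request.mem_gib))


def cpu_limit_overcommit(node: Resources, request: Resources, limit: Resources) -> float:
    """Ratio of summed CPU limits to node CPU once the node is packed by requests.

    Values above 1.0 mean not every workspace can burst to its limit at once.
    """
    return pods_per_node(node, request) * limit.cpu / node.cpu


node = Resources(cpu=16, mem_gib=64)
limit = Resources(cpu=8, mem_gib=16)        # what the developer "sees"
low_request = Resources(cpu=2, mem_gib=4)   # hypothetical lower request

print(pods_per_node(node, limit))                      # requests == limits -> 2
print(pods_per_node(node, low_request))                # lower requests -> 8
print(cpu_limit_overcommit(node, low_request, limit))  # -> 4.0
```

The 4x CPU overcommit is the trade-off. It works because most developers are typing rather than building at any given moment, but simultaneous heavy builds on the same node will compete for CPU. Memory above the request is also not guaranteed, so a node under memory pressure may evict or OOM-kill pods that use more than they requested.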

Autoscaling Nodes

With a cluster autoscaler enabled, Kubernetes will also automatically add nodes when new development environments no longer fit on the existing nodes. Conversely, as the workday ends, development environments are stopped either manually or by the auto-stop feature described above, and the autoscaler removes the nodes that are no longer needed.
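The scale-up and scale-down behavior can be illustrated with a toy first-fit simulation. This is a simplification, not how the Kubernetes scheduler or a cluster autoscaler is implemented. It tracks CPU requests only and assumes 16 allocatable vCPUs per node. It also scales down only nodes that have become completely empty, whereas real autoscalers can also drain underutilized nodes.

```python
from dataclasses import dataclass, field

NODE_CPU = 16.0  # assumed allocatable vCPUs per node


@dataclass
class Node:
    pods: dict = field(default_factory=dict)  # workspace name -> CPU request

    def free_cpu(self) -> float:
        return NODE_CPU - sum(self.pods.values())


def start_workspace(nodes: list, name: str, cpu_request: float) -> None:
    """Place the pod on the first node with room; otherwise scale up by one node."""
    if cpu_request > NODE_CPU:
        raise ValueError("request larger than a whole node")
    for node in nodes:
        if node.free_cpu() >= cpu_request:
            node.pods[name] = cpu_request
            return
    nodes.append(Node(pods={name: cpu_request}))


def stop_workspace(nodes: list, name: str) -> None:
    """Remove the pod, then scale down any node left with no workspaces."""
    for node in nodes:
        node.pods.pop(name, None)
    nodes[:] = [node for node in nodes if node.pods]


cluster: list = []
for i in range(10):                 # morning: ten 2-vCPU workspaces start
    start_workspace(cluster, f"ws-{i}", 2.0)
print(len(cluster))                 # -> 2 nodes
for i in (8, 9):                    # evening: two workspaces auto-stop
    stop_workspace(cluster, f"ws-{i}")
print(len(cluster))                 # -> 1 node
```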

DevOps teams can apply labels to development environments running as Kubernetes pods. They can then use Coder's audit log API to build dashboards that show which groups and users consume the most cloud compute, for chargeback purposes.
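Turning workspace start and stop events into a per-group chargeback report is mostly aggregation. The sketch below assumes you have already exported events into simple tuples carrying the workspace's group label and vCPU count. That tuple layout and the sample data are hypothetical and do not reflect the actual schema of Coder's audit log API.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical, simplified events: (ISO timestamp, group label, workspace, action, vCPUs).
EVENTS = [
    ("2024-01-08T09:00", "platform", "alice-dev", "start", 4),
    ("2024-01-08T10:00", "ml", "bob-train", "start", 8),
    ("2024-01-08T13:00", "ml", "bob-train", "stop", 8),
    ("2024-01-08T17:00", "platform", "alice-dev", "stop", 4),
]


def vcpu_hours_by_group(events) -> dict:
    """Sum vCPU-hours per group from matched start/stop pairs.

    Workspaces that are still running (no stop event yet) are not counted.
    """
    started = {}
    totals = defaultdict(float)
    # Timestamps share one ISO format, so sorting the tuples orders them chronologically.
    for ts, group, workspace, action, vcpus in sorted(events):
        t = datetime.fromisoformat(ts)
        if action == "start":
            started[workspace] = t
        elif action == "stop" and workspace in started:
            hours = (t - started.pop(workspace)).total_seconds() / 3600
            totals[group] += hours * vcpus
    return dict(totals)


print(vcpu_hours_by_group(EVENTS))  # -> {'ml': 24.0, 'platform': 32.0}
```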

Workspace Quotas

Within templates, DevOps teams and administrators can specify workspace resource costs in denominations of their choice. For example, a VM template might cost 75 units and a Kubernetes pod template 25 units. Quota allowances, such as 100 units, are defined at the group level. When developers create workspaces from templates, Coder checks whether the new workspace would exceed their quota and blocks creation if it would. Consider a developer in a group with a 100-unit budget who already has one VM workspace (75 units) and one Kubernetes workspace (25 units). If they try to create a second Kubernetes workspace, they will be blocked, since 125 units exceeds their allowance of 100 units.
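The quota check reduces to one comparison. Add the units a developer already uses to the cost of the new workspace, then compare the total against the group's allowance. Below is a minimal sketch using the example numbers from the text. The function and dictionary names are hypothetical, and the sketch covers a single group's allowance only.

```python
# Template costs and allowance from the example above (units are arbitrary).
TEMPLATE_COST = {"vm": 75, "kubernetes": 25}
GROUP_ALLOWANCE = 100


def can_create(existing_templates: list, new_template: str,
               allowance: int = GROUP_ALLOWANCE) -> bool:
    """Allow creation only if total usage stays within the allowance."""
    used = sum(TEMPLATE_COST[t] for t in existing_templates)
    return used + TEMPLATE_COST[new_template] <= allowance


print(can_create(["vm"], "kubernetes"))                # 75 + 25 = 100 -> True
print(can_create(["vm", "kubernetes"], "kubernetes"))  # 100 + 25 = 125 -> False
```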

Next Steps

If you are an enterprise interested in self-hosting a remote development platform, please contact Sales or request a demo. Otherwise, if you are technical, try out Coder OSS on your local machine or your server infrastructure. You can also start a trial of the Enterprise version.
