Your Agents Need Boundaries: How to Secure Coding Agents on Your Infrastructure

This post is part of Coder Launch Week (Dec 9–11, 2025). Each day, we’re sharing innovations that make secure, scalable cloud development easier. Follow along here.

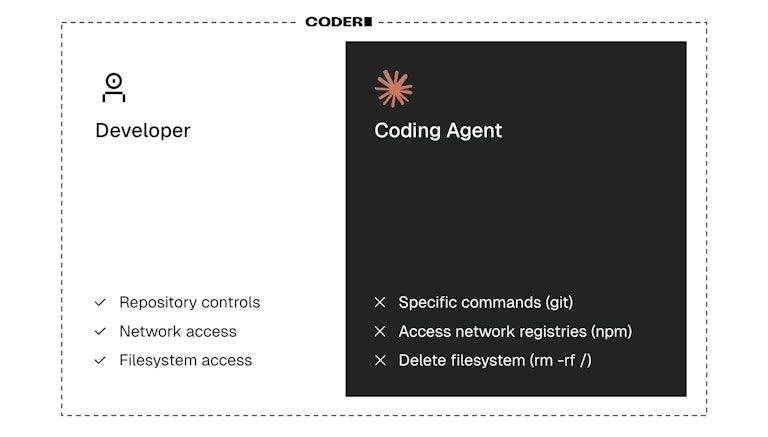

AI Agents are untrusted personnel

AI agents can be powerful teammates—writing code, debugging issues, and automating repetitive tasks. But unlike the human developers or tightly scoped applications IT teams are used to governing, agents represent an entirely new kind of workload: unpredictable and untrusted.

Most organizations secure two familiar categories of actors:

- Human workloads: inherently unpredictable in what they need to access, but granted medium-to-high trust through broad network permissions and credentialed access.

- Machine workloads: highly predictable in behavior, and therefore confined within strict, low-trust boundaries such as zero-trust networking and narrowly scoped service accounts.

AI agents break this mental model. They combine the unpredictability of humans (needing broad tool and data access to be useful) with the trust posture of applications (they must be treated as potentially hostile). This mismatch creates a fundamentally new threat model, captured by what Simon Willison calls the lethal trifecta: agents need direct access to private data, require external communication to function, and are dangerously susceptible to untrusted context.

These risks are not theoretical. Without proper restrictions, AI agents have:

- Leaked sensitive data to unauthorized external services

- Downloaded malicious packages from compromised registries

- Deleted entire databases through misinterpreted prompts

- Exfiltrated API keys and credentials to attacker-controlled domains

AI-powered incidents have cost enterprises millions of dollars and done irreparable damage to customer trust. The problem affects everyone: security teams tasked with governance, platform engineers responsible for safe infrastructure, and developers who want to use AI tools without becoming the next breach headline.

For many high-security enterprises, the risk of uncontrolled AI agents outweighs the productivity benefits. The question isn't whether to use AI—it's how to use it safely.

Where AI Agents break today’s security models

We believe AI agents must be treated as untrusted personnel, not predictable tools. They need the same kind of security boundaries you'd apply to a contractor with temporary access: specific, auditable, and enforceable.

Most current approaches fail in one of three ways:

- Too permissive: Relying on prompt engineering ("please don't leak secrets") or constant user authorization that blocks automation and slows developers to a crawl.

- Too restrictive: Sandbox-like environments without real access to your codebase, network, and tools are useless for real coding work.

- Too imprecise: Traditional network isolation tools that use IP-based rules, requiring constant maintenance and failing to distinguish between https://github.com/my-org/private-repo and https://github.com/my-org/public-repo-with-untrusted-issues.

The solution lies in fine-grained network access control—process-level safeguards that offer the granularity to filter based on hostnames and HTTP methods, not just IP addresses. This approach gives AI agents exactly the access they need to be productive, while blocking everything else.
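To make the contrast with IP-based rules concrete, here is a minimal sketch of request-level filtering. It is not Agent Boundaries' actual rule format: the `Rule` class, the `ALLOW` list, and `is_allowed` are hypothetical names, and the sketch assumes the enforcement layer can see each request's method and full URL. Both example repositories live on the same host, so an IP rule treats them identically, while a rule on method, hostname, and path can tell them apart.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit


@dataclass(frozen=True)
class Rule:
    """Hypothetical allow rule: one HTTP method, one host, one path prefix."""
    method: str
    host: str
    path_prefix: str


# Illustrative policy: read-only access to a single trusted repository.
ALLOW = [Rule("GET", "github.com", "/my-org/private-repo")]


def is_allowed(method: str, url: str, rules=ALLOW) -> bool:
    """Return True only if some rule matches method, host, and path."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    path = parts.path or "/"
    for rule in rules:
        if method.upper() != rule.method:
            continue
        if host != rule.host:
            continue
        # Match the prefix on a path-segment boundary, so that
        # "/my-org/private-repo-evil" does not slip through.
        if path == rule.path_prefix or path.startswith(rule.path_prefix + "/"):
            return True
    return False  # default deny


print(is_allowed("GET", "https://github.com/my-org/private-repo"))
print(is_allowed("GET", "https://github.com/my-org/public-repo-with-untrusted-issues"))
```

The first call is allowed and the second is denied, even though both URLs resolve to the same servers.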

Introducing Agent Boundaries

Agent Boundaries is Coder's solution for securing AI agents in development environments. It's an agent-aware firewall embedded directly in the secure workspaces that enterprises already trust.

How does Agent Boundaries work?

Agent Boundaries sits between your AI agents and the network, intercepting every request before it leaves the workspace. Platform admins and developers can define policies that:

- Control access: Designate which domains agents can access (api.github.com allowed, pastebin.com blocked)

- Enforce methods: Standardize which HTTP methods are permitted (allow GET requests, block POST to external analytics)

- Define permissions: Clarify which agents get which permissions (code analysis tools get read-only access, deployment agents get write access)
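As a rough illustration of how these three policy dimensions combine, the sketch below evaluates a request against a per-agent policy. The agent names, the `AgentPolicy` class, the `POLICIES` table, and the `decide` function are all assumptions made for this example and do not reflect Agent Boundaries' real configuration syntax.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical per-agent policy: which hosts and which HTTP methods."""
    allowed_hosts: frozenset
    allowed_methods: frozenset


# Illustrative policies: a read-only analysis agent and a deployment
# agent that is also allowed to write.
POLICIES = {
    "code-analysis": AgentPolicy(
        allowed_hosts=frozenset({"api.github.com"}),
        allowed_methods=frozenset({"GET", "HEAD"}),
    ),
    "deployment": AgentPolicy(
        allowed_hosts=frozenset({"api.github.com"}),
        allowed_methods=frozenset({"GET", "HEAD", "POST", "PUT"}),
    ),
}


def decide(agent: str, method: str, host: str) -> str:
    """Return "allow" or "deny"; unknown agents and hosts are denied."""
    policy = POLICIES.get(agent)
    if policy is None:
        return "deny"
    if host.lower() not in policy.allowed_hosts:
        return "deny"
    if method.upper() not in policy.allowed_methods:
        return "deny"
    return "allow"


print(decide("code-analysis", "POST", "api.github.com"))
print(decide("deployment", "POST", "api.github.com"))
print(decide("deployment", "POST", "pastebin.com"))
```

Under these assumed policies the analysis agent's POST is denied, the deployment agent's POST to api.github.com is allowed, and any request to pastebin.com is denied regardless of the agent.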

How Agent Boundaries secures AI usage:

The threats Agent Boundaries addresses aren't hypothetical. In October 2024, we witnessed the first documented AI-orchestrated cyberattack where an AI agent autonomously exploited a zero-day vulnerability, moved laterally through a network, and exfiltrated data without human intervention. The attack succeeded because the AI agent had unrestricted network access and no enforcement layer to stop unauthorized requests.

Agent Boundaries helps prevent this scenario by addressing four of the weaknesses that make AI agents dangerous:

- Data exfiltration: Block unauthorized domains before sensitive data leaves your environment

- Supply chain attacks: Prevent agents from downloading from compromised or malicious package registries

- Prompt injection exploits: When an attacker tricks an agent into making unauthorized requests, Agent Boundaries blocks them at the network level

- Security theater: Replace vague guidelines with enforceable, auditable policies

Who benefits from using Agent Boundaries?

The challenge with AI agent security is that traditional approaches force organizations to choose between protection and productivity. Lock down agents too tightly and developers can't do their jobs. Leave them too open and security teams can't sleep at night.

Agent Boundaries gives each stakeholder what they need:

- Security teams get control over agent behavior

- Platform engineers can enable AI tools without custom configuration and infrastructure for each new agent

- Developers can use AI assistants confidently, knowing guardrails are in place

AI agent security in action: Stopping a prompt injection in its tracks

Before Agent Boundaries

A developer uses an AI coding assistant to review a pull request. Hidden in one of the code comments is a malicious prompt injection, disguised as a TODO that asks for user data to be fetched and sent to an external domain.

The agent, trained to be helpful, interprets this as a legitimate instruction. It:

- Accesses the database credentials from environment variables

- Fetches sensitive user data

- POSTs the data to the attacker's domain

- Returns to the developer: "I've completed the data fetch as requested in the TODO"

The breach goes undetected until customers report unauthorized access to their accounts. The damage is done.

After Agent Boundaries

The same scenario unfolds, but Agent Boundaries is active with a policy that allows:

- api.github.com (for repository access)

- pypi.org and npmjs.com (for package management)

- Internal services on .company.internal

When the agent attempts to POST to attacker-logger.com:

- Agent Boundaries intercepts the request

- Checks the domain against the allowlist

- Blocks the request before it leaves the workspace

- Logs the attempt for security review

The developer sees: "Network request blocked by Agent Boundaries policy." The security team receives an alert. The sensitive data stays secure. The breach never happens.
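The flow above (intercept, check the allowlist, block, log) can be sketched in a few lines. This is an illustration under stated assumptions, not Agent Boundaries' implementation: `EXACT_HOSTS`, `SUFFIXES`, `host_allowed`, `handle_request`, the logger name, and the `/collect` path on the attacker's domain are all hypothetical. The allowlist mirrors the example policy, with `.company.internal` treated as a suffix match.

```python
import logging
from urllib.parse import urlsplit

# Hypothetical logger name; a real deployment would route this to an audit sink.
logger = logging.getLogger("agent_boundary_sketch")

# Allowlist taken from the example policy in the text.
EXACT_HOSTS = {"api.github.com", "pypi.org", "npmjs.com"}
SUFFIXES = (".company.internal",)  # matches any host ending in this suffix


def host_allowed(host: str) -> bool:
    """Exact match for public hosts, suffix match for internal services."""
    host = host.lower().rstrip(".")
    return host in EXACT_HOSTS or host.endswith(SUFFIXES)


def handle_request(method: str, url: str):
    """Return (allowed, message) for an outbound request from the agent."""
    host = urlsplit(url).hostname or ""
    if host_allowed(host):
        return True, "allowed"
    # Log the attempt for security review, then refuse to forward it.
    logger.warning("blocked %s %s (host %r not on allowlist)", method, url, host)
    return False, "Network request blocked by Agent Boundaries policy."


print(handle_request("POST", "https://attacker-logger.com/collect"))
print(handle_request("GET", "https://api.github.com/repos/my-org/private-repo"))
```

Matching on the leading dot matters: a host such as `company.internal.attacker-logger.com` contains the internal name but does not end with `.company.internal`, so it is still denied.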

Security meets developer experience

Agent Boundaries is built on a principle we hold across all of Coder: security and productivity go hand in hand; they are not opposites.

Security

By centralizing code execution and agent activity in isolated, auditable cloud workspaces, Agent Boundaries gives security teams the control they need without slowing down development. Every agent request is logged, every policy violation is tracked, and every potential breach is stopped before it starts. It's Zero Trust architecture extended to AI agents.

Developer experience

Developers get to use the AI tools that make them productive without drowning in approval workflows or security questionnaires. Agent Boundaries operates transparently:

- If an agent is behaving normally, developers experience no friction.

- If an agent goes rogue, it gets stopped automatically.

No constant pop-ups asking "are you sure?" No manual reviews for every API call.

Just as it’s far more productive to iterate in a reproducible, fully configured cloud workspace than to push changes to CI and wait to see what happens, the inner loop is dramatically better when agents run co-located with a real environment. Boundaries make this possible: instead of isolating agents in a stripped-down sandbox with no context, developers and agents share the same authentic workspace—tools, dependencies, data, and all.

This feature is part of a larger movement: treating development infrastructure as a security boundary, not just a productivity tool. As AI agents become more autonomous and capable, the stakes only get higher. Agent Boundaries ensures you can move fast with AI without breaking things—or breaking trust.

Get started with Agent Boundaries

Ready to secure your AI development workflow?

Try it yourself: Spin up a Coder workspace with Agent Boundaries enabled and see how policies protect your agents in real time. To do so, use the Claude Code module on the Coder Registry, or check out the GitHub repository.

Putting together your domain allowlist? Claude’s documentation has a list to start from.

Go deeper: Read the documentation to configure policies for your specific use cases.

Explore the code: Agent Boundaries builds on the httpjail open-source project. Check out Ammar Bandukwala's blog post, Simon Willison's analysis, or tinker with the code yourself.
