Simplify data science environments with Coder

In "Creating a solid Data Science development environment," Gabriel dos Santos Gonçalves describes the joy he and other data scientists feel as they dive into a new data set. Too often, though, the revelry is broken by the need to attend to what Gonçalves calls the “not so fun” part of his job—setting up his workstation with all the apps and libraries necessary for the project. “In general,” he writes, “we Data Scientists tend to focus more on the results (a model, a visualization, etc) than the process itself, meaning that we don’t pay enough attention to documentation and versioning as much as a software engineer.”

He then goes on to lay out the steps he recommends for setting up a reproducible development environment that provides the languages, dependencies, and tooling necessary for his projects—the drudgery that data scientists must do before getting to the fun stuff.

In this post, I’ll show you how Coder saves data scientists from that drudgery by allowing them to create fully configured workspaces with the click of a button. Coder also solves a number of other problems that data scientists face daily, such as the need for compute power, the protection of data sets, and data latency.

Spend time analyzing data, not configuring environments

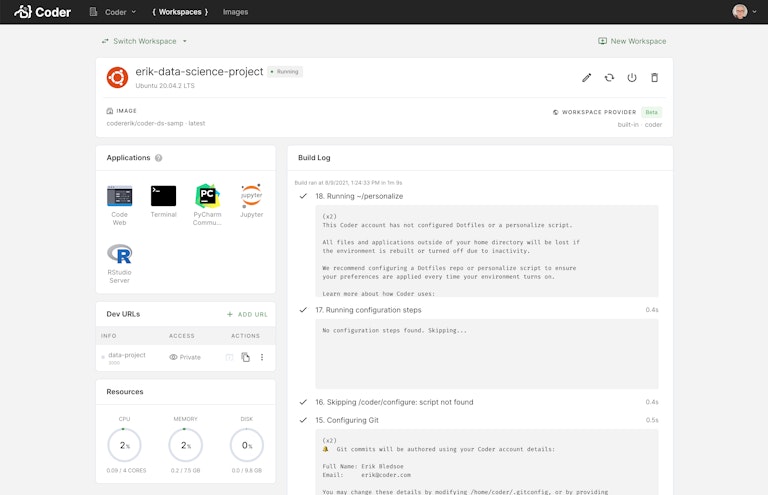

Coder centralizes the creation and orchestration of workspaces for development in a secure and repeatable way using Infrastructure as Code. With Dockerfiles and Coder workspace templates, teams can prepare a development environment once. The result is a reproducible development environment that provides the languages, dependencies, and tooling necessary for a project and that can be used by any data scientist on the team with the appropriate permissions.

To get started on a project, a data scientist only has to select a workspace and start analyzing the data, avoiding all of the “not so fun” stuff that goes along with setting up and configuring the environment.

If a team member is working on the same project, they can launch a workspace from the same image and be certain that an analysis run in one workspace will produce the same results in the other. No more, “well, it works on my machine.”

Context switching made easy

Creating workspaces from Docker images also makes it much easier to move quickly from one project to another. Spinning up a workspace to help out with a new project takes minutes. Context switching between projects shouldn’t take days.

Coder supports the tools data scientists use

Coder has built-in support for the IDEs data scientists prefer, including RStudio, PyCharm, Jupyter, and VS Code. While Jupyter is by far the most commonly used IDE for data exploration, data scientists often switch between IDEs depending on whether they are exploring a data set or implementing a model to be used by the business. Coder accommodates this workflow by making multiple IDEs accessible through the browser or over SSH.

As I’ll demonstrate below, because Coder workspaces are created from images built from Dockerfiles, those images can be pre-configured with any of the required IDEs, the precise version of Python required for a project, and the libraries typically used, such as NumPy, Pandas, Keras, PyTorch, and TensorFlow. The data scientist should never have to worry about whether they remembered to install a needed library or about conflicts between versions.
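As a rough illustration of what a pre-configured image removes from the data scientist's plate, the following minimal sketch checks an environment against a project's requirements. The minimum Python version and the package list are hypothetical placeholders, not requirements taken from Coder or from any particular project.

```python
import sys
from importlib.metadata import PackageNotFoundError, version

# Hypothetical requirements for illustration; a real project defines its own.
REQUIRED_PYTHON = (3, 9)
REQUIRED_PACKAGES = ["numpy", "pandas"]


def missing_packages(names):
    """Return the subset of `names` that is not installed in this environment."""
    missing = []
    for name in names:
        try:
            version(name)  # raises PackageNotFoundError if not installed
        except PackageNotFoundError:
            missing.append(name)
    return missing


def python_is_recent_enough(minimum, current=None):
    """Return True if the interpreter is at least `minimum` (major, minor)."""
    current = sys.version_info[:2] if current is None else current
    return tuple(current) >= tuple(minimum)


if __name__ == "__main__":
    print("Python OK:", python_is_recent_enough(REQUIRED_PYTHON))
    print("Missing packages:", missing_packages(REQUIRED_PACKAGES) or "none")
```

On a workspace built from a shared image, a check like this should come back clean for every team member, because everyone starts from the same installed set.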

Coder also integrates with the major version control system (VCS) providers, including GitHub, GitLab, and Bitbucket Server, allowing data scientists to collaborate on code as well as data.

Data platform agnostic

Coder doesn’t care where your data is stored. Whether you use AWS, Google Cloud, Databricks, Snowflake, an on-site data warehouse, or some combination of all of the above, if you can access your data, you can do so through your Coder workspace.

Whether we are talking about IDEs, VCS, data platforms, or cloud providers, our goal at Coder is to integrate with the tools and services you already use, not to lock you into a particular toolset.

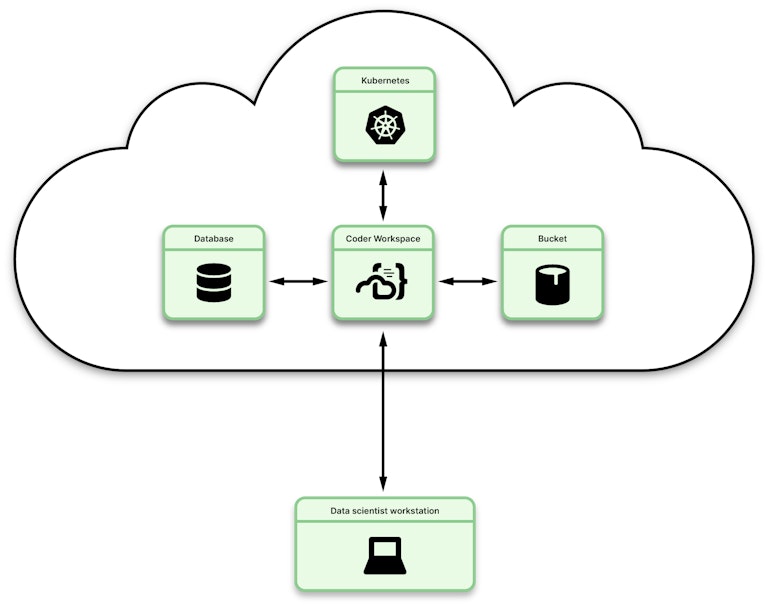

Use the power of the cloud to crunch large data sets

Analyzing millions and millions of data points to train your deep learning model takes a lot of compute power and memory. With Coder workspaces, that compute power and memory are provided by the Kubernetes cluster, not the scientist’s local machine. In addition, workspaces can be configured to automatically draw upon the cluster’s unused memory and CPUs, adding power during periods of high need.

Coder workspaces can also be configured with a Graphics Processing Unit (GPU), allowing them to harness the massive parallelism of the GPU.

Drawing upon the power and scale of the cloud significantly reduces the time needed to analyze massive data sets. It also frees up the data scientist’s personal machine, allowing them to work on other tasks while the cloud workspace churns away. They could even spin up a new workspace and start working on a separate project while the analysis is running. Try doing that with even the beefiest workstation.

Protect intellectual property and customer data

Data breaches present a clear and present danger to most companies, and the situation is only getting worse. 2020 was the worst year on record in terms of the total number of records exposed, and it broke the previous record before the end of the second quarter. The annual Data Breach Investigations Report identified over 5,200 confirmed data breaches in 2021. While most data breaches are the result of outside malicious agents and/or human error, the number of threats from malicious insiders increased 47% between 2018 and 2020.

From a security perspective, there are few good reasons to allow sensitive data and intellectual property to reside on an individual’s device.

Yet, training and running machine learning models require data, often sensitive data like customer data.

With Coder, this data remains isolated and secure in workspaces residing on the cluster, not sitting on data scientists’ personal devices, thereby reducing the risk of both accidental and malicious data leakage.

Decreased data latency

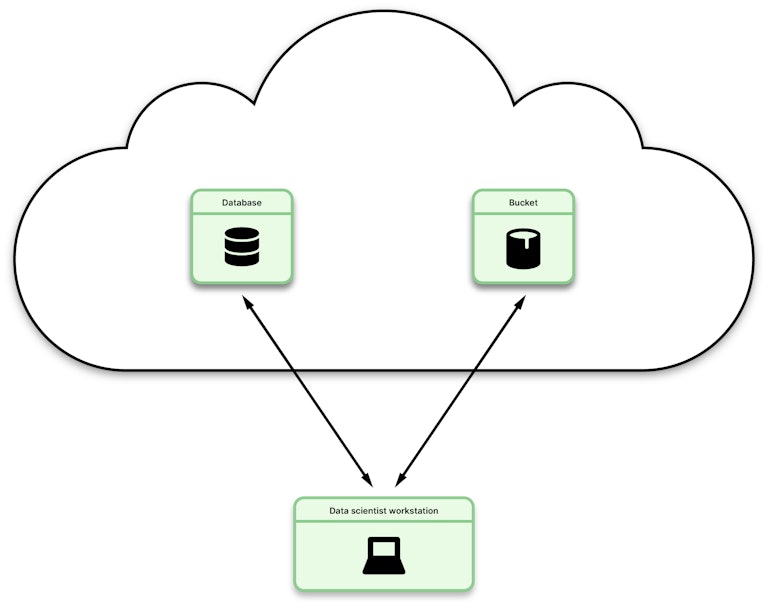

In addition to the security benefits of not downloading data to local machines, there are also significant efficiencies to be had.

Data scientists frequently work with large data sets that are stored in the organization’s cloud, either in buckets or databases. Downloading data or even returning query results to the user’s local machine requires the data to traverse the distance between the cloud provider’s servers and the organization’s network (or even the scientist’s home network).

Typical desktop-to-cloud latency is about 100 ms. Because Coder workspaces are located in the cloud alongside the data sources, this latency is essentially zero. One customer reported to us that a database query that took forty seconds to execute locally took less than one second when executed from a Coder workspace!

Obviously, results will vary depending on a variety of factors, but in general, users should expect significantly less data latency when using Coder.
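To see why per-request latency can dominate, here is a back-of-the-envelope sketch. The model (total time equals round trips times latency, plus server-side work) is a simplification, and the round-trip count, server time, and 1 ms in-cluster latency are hypothetical values chosen for illustration; they are not measurements from the customer mentioned above.

```python
def total_query_time(round_trips, latency_s, server_time_s=0.0):
    """Estimate wall-clock time as network round trips plus server-side work."""
    return round_trips * latency_s + server_time_s


# Hypothetical workload: 350 round trips and 0.5 s of server-side work.
ROUND_TRIPS = 350
SERVER_TIME_S = 0.5

# About 100 ms per round trip from a desktop (the figure quoted above).
local = total_query_time(ROUND_TRIPS, latency_s=0.100, server_time_s=SERVER_TIME_S)
# Assumed 1 ms per round trip when the workspace sits next to the data.
in_cluster = total_query_time(ROUND_TRIPS, latency_s=0.001, server_time_s=SERVER_TIME_S)

print(f"from a desktop:   {local:.2f} s")
print(f"from a workspace: {in_cluster:.2f} s")
```

Under these assumptions the server does the same amount of work in both cases; the difference comes entirely from how long each round trip spends on the network.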

Reduce configuration drift and model deployment issues

Not all analytical models are intended to be deployed to a production environment, but those that are headed to production depend upon a high degree of environmental consistency as they move from development to testing to production. Configuration drift occurs when there is any unplanned variance between a system's expected or recorded state and its actual state. Drift can occur at any point in the pipeline and is the number one cause of failed deployments.

Coder reduces the likelihood of configuration drift by keeping development environments consistent with other environments in the pipeline. Coder encourages the practice of rolling out a new image with new dependencies instead of depending on an engineering manager telling everyone to "make sure to upgrade to Python 3.9.5." When the production environment changes, all that is required to keep the development environments in line is an update to the workspace Docker image. These changes can then be pushed out to all workspaces created from that image, averting configuration drift for the entire team.
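Drift, as defined above, is a mismatch between a recorded state and an actual state, so it can be sketched as a comparison of two version maps. This is a minimal illustration, not a Coder feature: the function name `find_drift` and the package versions are hypothetical.

```python
def find_drift(expected, actual):
    """Return {name: (expected_version, actual_version)} for every mismatch.

    A package missing from one side is reported with None for that side.
    """
    drift = {}
    for name in sorted(set(expected) | set(actual)):
        want = expected.get(name)
        have = actual.get(name)
        if want != have:
            drift[name] = (want, have)
    return drift


# Hypothetical recorded state, e.g. what the workspace image was built with.
expected = {"python": "3.9.5", "numpy": "1.21.0", "pandas": "1.3.0"}
# Hypothetical actual state of a hand-maintained machine.
actual = {"python": "3.9.2", "numpy": "1.21.0", "scipy": "1.7.0"}

for name, (want, have) in find_drift(expected, actual).items():
    print(f"{name}: expected {want}, found {have}")
```

When every workspace is rebuilt from the same image, the two maps come from the same source, which is why the comparison should come back empty.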

Building a Coder workspace for data science

Here is a sample Dockerfile that could be used to build an image that could serve as the foundation for Coder workspaces for data science. It installs Jupyter, PyCharm, RStudio, and code-server for running VS Code. It also includes Python and a variety of commonly used libraries. The original file is available in my repository, or you can pull the image from Docker Hub.

Conclusion

People typically go into data science because they like solving problems with data, not because they enjoy installing Python library dependencies. Companies hire data scientists for the business insights they provide with their analyses. Coder lets data scientists spend their time doing what they enjoy and what the business finds valuable.

To learn more about how Coder can support your company’s data analysis efforts, schedule a demo or sign up for a sixty-day free trial.
