Inside the Stack: Secure and Scale AI Coding Agents with Coder

Platform teams across industries are exploring how to expand their adoption of AI coding agents, with or without a human in the loop. But we often see momentum slow once governance, security, and compliance enter the equation. We’ve learned from our customers that the difference between a stalled pilot and production-ready AI development comes down to infrastructure.

Coder helps teams address these challenges and unblock AI tools in their workflows. Our customers deploy Coder for a consistent and well-governed enterprise development platform that supports both human and AI agent workflows.

The following technology stack offers a blueprint for deploying AI coding agents securely and effectively in enterprise environments for various industries and use cases. This is how some of the world’s leading teams are running AI agents in production today.

Essential concepts

Before we walk through the Coder AI stack, here are the core components that make it work:

- Templates are written in Terraform and define the underlying infrastructure that all Coder workspaces run on. Admins create these templates to ensure consistent, reproducible environments for developers and AI agents. A template may consist of one or more files describing the infrastructure that hosts Coder workspaces. It all starts with the templates!

- A Workspace is the development environment that developers or AI agents work in. Workspaces are provisioned with Terraform inside the customer’s infrastructure. Each Workspace has one associated user.

- Coder Tasks is an interface that allows you to run and manage coding agents like Claude Code and Amazon Q within a given Workspace. A developer can jump in and steer the agent as required.

- Agent Boundaries let admins run agents like Claude Code behind a separate firewall with process isolation, preventing agents from accessing resources they are not permitted to use.

- AI Bridge acts as a gateway between your users' coding agents or IDEs and LLM providers. By intercepting all AI traffic between these clients and the upstream APIs, AI Bridge can record user prompts, token usage, and tool invocations.

These building blocks are designed to work as a unified system where infrastructure-as-code definitions flow into governed execution environments and AI interactions pass through centralized security controls. The result is an architecture where platform teams maintain control while developers and AI agents maintain velocity.

Coder's blueprint for running AI agents

To developers and AI agents, all that matters is that they have consistent environments that just work with all the tools they need. No setup friction. No security exceptions. No wondering if the agent can access what it needs or if it's violating policy.

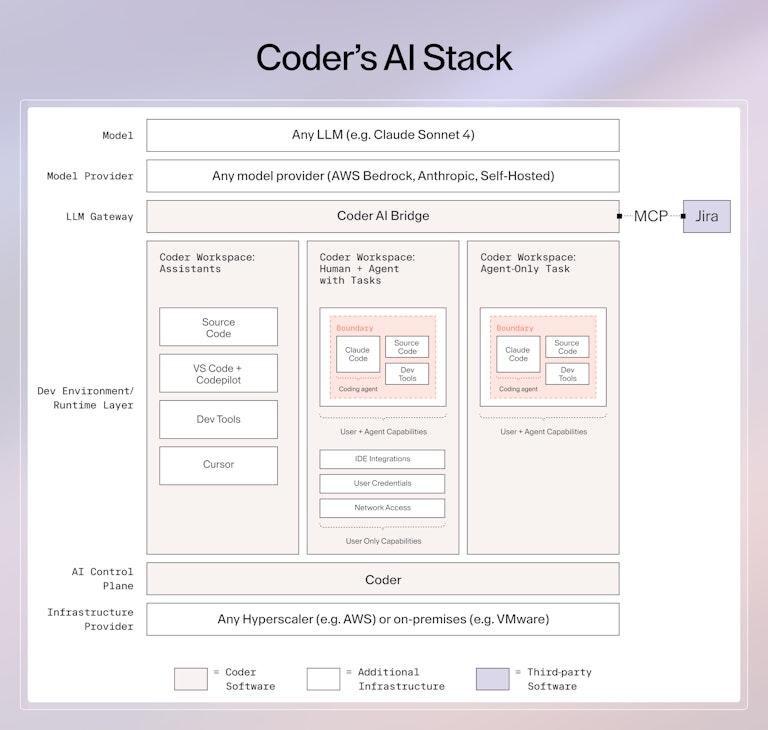

Coder is cloud, IDE, and agent agnostic which means admins can pick and choose tools that their users like and create standardized templates for faster onboarding. The key components for Coder’s AI-native development platform are illustrated in the diagram below:

The AI stack that makes it possible

Six layers work together to deliver security, consistency, and scale. We'll walk through them bottom-up, starting with the foundation and building toward where work actually happens.

Infrastructure layer: Deployment flexibility

This is the foundation where the hardware lives: any public cloud (AWS, GCP, Azure) or private infrastructure (such as VMware). Coder is self-hosted, which means you can deploy it on any hyperscaler, on-prem, or even in air-gapped data centers to comply with regulatory requirements. Every deployment model can be provisioned with availability, reliability, and scale in mind.

Because Coder runs inside your own infrastructure, it inherits all the access and security policies you define. On top of that infrastructure, you can run Kubernetes, VMs on EC2, OpenStack, VMware, and so on. This compute layer abstracts the hardware Coder runs on, so teams don’t need to manage raw infrastructure and can standardize how development environments are provisioned. Most commonly, we see teams deploying Kubernetes clusters for cloud-native workloads and AWS EC2 for monorepo-heavy development environments.

The next three layers are part of Coder software: an AI control plane, a development environment, and an LLM gateway.

AI Control Plane: The orchestration layer

Coder’s AI control plane is the central coordinator for Workspace lifecycles, user authentication, agent permissions, Workspace configuration, and external integrations. Both the UI and the API support template creation and hosting, provisioning, Role-Based Access Control (RBAC), governance, and lifecycle management.

Coder separates who defines environments from who uses them. Admins create and manage Templates while developers use those Templates to launch Workspaces. Templates are responsible for creating and running AI agents within Workspaces. This separation ensures consistency, repeatability, and governance at scale.
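The following minimal Python sketch makes the admin/user split concrete. It is not Coder’s API. The `ControlPlane`, `Template`, and `Workspace` classes and the `template-admin` and `member` role names are hypothetical stand-ins, and a real template is Terraform rather than a Python object. The sketch only shows that admins publish templates while developers and agents launch Workspaces from them.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Template:
    """Admin-owned environment definition (a stand-in for a Terraform template)."""
    name: str
    version: int
    base_image: str  # hypothetical field: what every workspace built from it runs


@dataclass(frozen=True)
class Workspace:
    """A user-owned environment pinned to one template version."""
    owner: str
    template: Template


class ControlPlane:
    """Toy control plane: admins publish templates, members launch workspaces."""

    def __init__(self, roles: dict[str, str]) -> None:
        self.roles = roles  # user -> hypothetical role name
        self.templates: dict[str, Template] = {}
        self.workspaces: list[Workspace] = []

    def publish_template(self, user: str, template: Template) -> None:
        # Only template admins may define or change environments.
        if self.roles.get(user) != "template-admin":
            raise PermissionError(f"{user} may not publish templates")
        self.templates[template.name] = template

    def create_workspace(self, user: str, template_name: str) -> Workspace:
        # Any known user may launch a workspace, but only from a published template.
        if user not in self.roles:
            raise PermissionError(f"{user} is not a member of this deployment")
        workspace = Workspace(owner=user, template=self.templates[template_name])
        self.workspaces.append(workspace)
        return workspace


cp = ControlPlane({"alice": "template-admin", "bob": "member", "agent-1": "member"})
cp.publish_template("alice", Template("python-dev", 3, "python:3.12"))
human = cp.create_workspace("bob", "python-dev")
agent = cp.create_workspace("agent-1", "python-dev")
```

Both Workspaces reference the same published template version, so the human and the agent start from identical environments. To change the environment, an admin publishes a new template version rather than editing individual Workspaces.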

Development Environment: Where governance meets execution

This is the most critical part of the stack. Workspace infrastructure here spans the resources that developers and AI tools use to read, write, and execute code, with tools, boundaries, policies, and governance preconfigured and enforced at runtime. Every Workspace is configured via infrastructure as code, so you can track changes, replicate setups, and keep everything consistent across your fleet.

Agent Boundaries provide network-level enforcement to block domains, subnets, or HTTP verbs and prevent exfiltration inside your Coder Workspace, ensuring that an AI agent does not operate with the same level of privileges as its human counterpart.
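The sketch below illustrates the kind of rule set this implies. It evaluates a default-deny egress policy by HTTP verb, domain, and destination subnet. It is a hypothetical model written in Python, not Agent Boundaries’ actual configuration format or enforcement code. The `BoundaryPolicy` class, the `is_allowed` function, and the example domains and subnets are all assumptions.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass
from urllib.parse import urlsplit


@dataclass(frozen=True)
class BoundaryPolicy:
    """Hypothetical default-deny egress policy for an agent process."""
    allowed_domains: frozenset[str]
    blocked_subnets: tuple[ipaddress.IPv4Network | ipaddress.IPv6Network, ...]
    allowed_methods: frozenset[str]


def is_allowed(policy: BoundaryPolicy, method: str, url: str, resolved_ip: str) -> bool:
    """Return True only if the verb, domain, and destination IP all pass the policy."""
    if method.upper() not in policy.allowed_methods:
        return False
    host = (urlsplit(url).hostname or "").lower()
    # Match the domain itself or a subdomain, but not look-alikes such as "evilgithub.com".
    if not any(host == d or host.endswith("." + d) for d in policy.allowed_domains):
        return False
    ip = ipaddress.ip_address(resolved_ip)
    # Block by subnet even for allowed names, e.g. cloud metadata or internal ranges.
    return not any(ip in net for net in policy.blocked_subnets)


policy = BoundaryPolicy(
    allowed_domains=frozenset({"github.com", "pypi.org"}),
    blocked_subnets=(
        ipaddress.ip_network("169.254.0.0/16"),
        ipaddress.ip_network("10.0.0.0/8"),
    ),
    allowed_methods=frozenset({"GET", "HEAD"}),
)
```

The sketch checks the resolved IP as well as the hostname. As a result, an allowed name that resolves into a blocked range, such as a cloud metadata address, is still refused.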

Admins can select any AI tools to run on Coder, whether it’s AI coding assistants like Cursor or Copilot that help developers write, refactor, and document code in real time, or AI coding agents like Claude Code or Codex that autonomously complete scoped tasks such as writing, testing, and committing code changes without step-by-step human guidance.

You can run AI coding agents on their own, alongside users, or use only coding assistants, depending on the task at hand. For example, a team triaging a complex bug may assign the initial investigation to Claude Code within a Coder workspace, which analyzes logs, traces the issue, and proposes potential fixes. The developer then jumps into their preferred IDE within the same workspace to review the analysis and complete the implementation.

Both the developer and the agent have access to source code and tools, but only the developer has access to integrations, user credentials, and the network. Agents can operate on code and tooling but can't impersonate users, access credentials, or reach external networks unless explicitly allowed.
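One simple way to picture "agents can't access credentials unless explicitly allowed" is a default-deny environment for the agent process. The Python sketch below is an illustrative assumption, not how Coder implements it. The `agent_environment` function and the `AGENT_ENV_ALLOWLIST` contents are hypothetical, and the token values are fake.

```python
from __future__ import annotations

from collections.abc import Mapping

# Hypothetical allowlist: the only variables an agent process inherits by default.
AGENT_ENV_ALLOWLIST = frozenset({"PATH", "HOME", "LANG", "TERM"})


def agent_environment(
    user_env: Mapping[str, str], extra: frozenset[str] = frozenset()
) -> dict[str, str]:
    """Default-deny: copy only allowlisted variables, so tokens and keys stay with the user."""
    allowed = AGENT_ENV_ALLOWLIST | extra
    return {key: value for key, value in user_env.items() if key in allowed}


developer_env = {
    "PATH": "/usr/local/bin:/usr/bin",
    "HOME": "/home/coder",
    "GITHUB_TOKEN": "ghp_example",       # fake value for illustration
    "AWS_SECRET_ACCESS_KEY": "example",  # fake value for illustration
}
agent_env = agent_environment(developer_env)
```

The resulting dictionary could be passed as the `env` argument to `subprocess.run` when launching the agent. Tokens in the developer's session would then never reach the agent's process unless an admin adds them to the allowlist.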

Common Coder Workspace configurations include:

Coding Assistants: These are the most widely adopted form of AI today and often serve as a gateway to more advanced AI coding agents. Coding assistants are great for in-line suggestions, autocompletions, and code explanations and can run inside Coder Workspaces. Coder integrates with agentic IDEs such as Cursor, Windsurf, and Zed to work alongside developers. Template admins can pre-install extensions for coding assistants such as GitHub Copilot and Roo Code. Running these assistants inside Coder workspaces rather than locally provides faster onboarding with out-of-the-box setup, standardized environments, and security through isolated environments. Common use cases include small refactors, generating unit tests, writing inline documentation, and code search and navigation.

Human + Agent with Coder Tasks: Best for collaborative development, where a human and an AI agent (e.g., Claude Code) share the same Coder Workspace. Coder provides fully capable Workspaces, so when humans pair-program with agents, the developer can switch to an IDE and take an issue to completion. Developers delegate research and initial implementation to AI, then take over in their preferred IDE to finish the work. The difference is the access level: the agent shares the source code and tools, while integrations, user credentials, and network access remain with the developer unless explicitly allowed. Common use cases include bug triage and analysis, exploring technical approaches, understanding legacy code, and creating starter implementations.

Agent-only Task: Great for AI agents that run independently, without user interaction, for extended periods. Coder Tasks provides isolated, context-based Workspaces with source code, dev tools, and services, allowing asynchronous task execution. The workspace shell is not exposed externally and is used to manage agent execution, such as job orchestration or monitoring. Tasks become available when a workspace's template defines the coder_ai_task resource and a coder_parameter named "AI Prompt" in its source code. In other words, any existing template can become a Task template by adding that resource and parameter. Common use cases include automated code reviews, scheduled data processing, continuous integration tasks, and monitoring and alerting.
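The following rough Python check illustrates the rule. It tests whether a template's Terraform source declares a `coder_ai_task` resource and a `coder_parameter` data source named "AI Prompt". It is a naive text scan that does not parse HCL and ignores braces inside strings, and it is not how Coder itself detects Task templates. `is_task_template` and the sample template are hypothetical, and the `coder_ai_task` attributes are deliberately omitted.

```python
import re

AI_TASK_RE = re.compile(r'resource\s+"coder_ai_task"\s+"[^"]+"\s*\{')
PARAM_START_RE = re.compile(r'data\s+"coder_parameter"\s+"[^"]+"\s*\{')
PROMPT_NAME_RE = re.compile(r'^\s*name\s*=\s*"AI Prompt"\s*$', re.MULTILINE)


def _block_body(src: str, open_brace: int) -> str:
    """Return the text between a '{' at index open_brace and its matching '}'."""
    depth = 0
    for i in range(open_brace, len(src)):
        if src[i] == "{":
            depth += 1
        elif src[i] == "}":
            depth -= 1
            if depth == 0:
                return src[open_brace + 1 : i]
    return src[open_brace + 1 :]  # unterminated block: take the rest


def is_task_template(src: str) -> bool:
    """Naive check: a coder_ai_task resource plus a coder_parameter named "AI Prompt"."""
    if not AI_TASK_RE.search(src):
        return False
    for match in PARAM_START_RE.finditer(src):
        body = _block_body(src, match.end() - 1)  # match ends just after '{'
        if PROMPT_NAME_RE.search(body):
            return True
    return False


SAMPLE_TEMPLATE = '''
resource "coder_ai_task" "task" {
  # attributes omitted in this illustration
}

data "coder_parameter" "ai_prompt" {
  name    = "AI Prompt"
  type    = "string"
  mutable = false
}
'''
```

A production check would use a real HCL parser, or simply ask Coder. The scan above is only meant to show that the Task rule is a property of the template source.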

LLM Gateway: Secure model access

Coder AI Bridge is an LLM gateway that brokers model calls and manages centralized access to MCP servers (e.g., Jira, GitHub) for AI agents and assistants. AI Bridge sits between these clients and LLM providers, monitoring the AI traffic they send to the upstream APIs to record user prompts, token usage, and tool invocations.

AI Bridge centralizes logging of prompts and tool calls, leverages existing identity and RBAC, and supports both centrally configured and local tools. This lets teams deliver AI development tools responsibly and at enterprise scale, with compliance, cost controls, and security policies enforced through built-in governance.
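The record-keeping side of a gateway like this can be sketched in a few lines. The Python below is a hypothetical model, not AI Bridge's implementation. `AuditingGateway`, `AuditRecord`, and the response shape returned by `fake_upstream` (a `usage` map and a `tool_calls` list) are assumptions chosen for illustration.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field
from typing import Any, Callable

# Hypothetical upstream signature: (model, prompt) -> provider response as a dict.
Upstream = Callable[[str, str], dict[str, Any]]


@dataclass(frozen=True)
class AuditRecord:
    user: str
    model: str
    prompt: str
    input_tokens: int
    output_tokens: int
    tool_calls: tuple[str, ...]
    timestamp: float


@dataclass
class AuditingGateway:
    """Forwards each request upstream and records who asked what, and what it cost."""
    upstream: Upstream
    log: list[AuditRecord] = field(default_factory=list)

    def complete(self, user: str, model: str, prompt: str) -> dict[str, Any]:
        response = self.upstream(model, prompt)
        usage = response.get("usage", {})
        self.log.append(
            AuditRecord(
                user=user,
                model=model,
                prompt=prompt,
                input_tokens=usage.get("input_tokens", 0),
                output_tokens=usage.get("output_tokens", 0),
                tool_calls=tuple(call["name"] for call in response.get("tool_calls", [])),
                timestamp=time.time(),
            )
        )
        return response

    def tokens_by_user(self) -> dict[str, int]:
        """Sum input and output tokens per user from the audit log."""
        totals: dict[str, int] = {}
        for rec in self.log:
            totals[rec.user] = totals.get(rec.user, 0) + rec.input_tokens + rec.output_tokens
        return totals


def fake_upstream(model: str, prompt: str) -> dict[str, Any]:
    """Stand-in for a real provider; returns a canned response."""
    return {
        "text": f"echo: {prompt}",
        "usage": {"input_tokens": len(prompt.split()), "output_tokens": 5},
        "tool_calls": [{"name": "github.search_issues"}],
    }


gateway = AuditingGateway(fake_upstream)
gateway.complete("bob", "example-model", "summarize open bugs")
gateway.complete("agent-1", "example-model", "run the test suite")
```

Every request passes through one place, so per-user token totals and tool-call histories can be read straight from the log. This is the basis for the cost controls and audit trails a gateway makes possible.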

Model Provider

A model provider standardizes access to different AI models, though you can also self-host your own. Common model providers include AWS Bedrock, Anthropic, and OpenAI. Model providers integrate with AI Bridge to provide authentication, observability, and cost management regardless of which models your teams choose.

LLM Models

Coder is agnostic to which LLM you choose, whether it is a common model from a major vendor or your own custom model. We support different LLMs through AI Bridge, which allows teams to configure which providers and models their AI agents and assistants use. This setup enables organizations to use LLMs within their own infrastructure while maintaining consistent governance, compliance, and security controls. Research which models you are comfortable with and configure your Coder templates to use them.
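The following minimal sketch shows what "configure which providers and models" might look like as a default-deny routing table. The provider keys, the placeholder model names, and `resolve_route` are hypothetical. They are not AI Bridge configuration keys or real model identifiers.

```python
from __future__ import annotations

# Hypothetical admin configuration: which providers and models agents may use.
ALLOWED_MODELS: dict[str, frozenset[str]] = {
    "anthropic": frozenset({"model-a", "model-b"}),
    "bedrock": frozenset({"model-c"}),
}


def resolve_route(provider: str, model: str) -> tuple[str, str]:
    """Return the (provider, model) pair if configured, else refuse the request."""
    models = ALLOWED_MODELS.get(provider)
    if models is None:
        raise PermissionError(f"provider {provider!r} is not configured")
    if model not in models:
        raise PermissionError(f"model {model!r} is not enabled for {provider!r}")
    return provider, model
```

Keeping this table in one central place means switching or adding a model is a configuration change rather than a change to every agent.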

From architecture to action

Running AI agents in enterprise development environments introduces new risks such as access to sensitive code, potential data exfiltration, policy violations, or unintended changes. The above technology stack will help you address these risks by enforcing clear execution boundaries, scoping permissions per task, logging every interaction for traceability, and supporting hybrid human/agent collaboration with role-based access.

The stack you've just seen is how enterprises in financial services, defense, and high tech are running AI agents in production today. The difference between their success and the 40% of AI projects Gartner predicts will be scrapped comes down to infrastructure built for governance from day one.

Here's what changes when you adopt AI agents with Coder:

- Security teams get deterministic controls rather than policy documents that rely on developers to comply

- Platform engineers get consistent environments rather than snowflake setups that break in production

- Developers get AI acceleration rather than AI friction and endless security exceptions

- Leadership gets production deployment rather than stalled pilots and wasted investment

Ready to see how this AI stack works in your environment? Try Coder and explore how it can strengthen your development workflows by unlocking AI tools across your enterprise.

Resources

Coder Documentation

Access Coder Workspaces

Coder Tasks

Security and Boundaries

Coder Registry

Coder Premium vs Community packaging
