Setup

AI Bridge runs inside the Coder control plane (coderd), so it requires no separate compute to deploy or scale. Once enabled, coderd runs aibridged in-memory and brokers traffic to your configured AI providers on behalf of authenticated users.

Required:

- A Premium license with the AI Governance Add-On.

- The feature must be enabled using the server flag.

- API key(s) for one or more providers must be configured.

Activation

You will need to enable AI Bridge explicitly:

export CODER_AIBRIDGE_ENABLED=true

coder server

# or

coder server --aibridge-enabled=true

Configure Providers

AI Bridge proxies requests to upstream LLM APIs. Configure at least one provider before exposing AI Bridge to end users.

Set the following when routing OpenAI-compatible traffic through AI Bridge:

- CODER_AIBRIDGE_OPENAI_KEY or --aibridge-openai-key

- CODER_AIBRIDGE_OPENAI_BASE_URL or --aibridge-openai-base-url

The default base URL (https://api.openai.com/v1/) works for the native OpenAI service. Point the base URL at your preferred OpenAI-compatible endpoint (for example, a hosted proxy or LiteLLM deployment) when needed.
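As a minimal sketch of the OpenAI settings above, the environment variables could be exported before starting the server. The key value and the proxy URL here are placeholders, and check_aibridge_env is a hypothetical helper written for this example, not part of the coder CLI. Its test for "true" is deliberately strict and may reject other values the server would accept.

```shell
# Placeholder values for illustration; substitute your own key and endpoint.
export CODER_AIBRIDGE_ENABLED=true
export CODER_AIBRIDGE_OPENAI_KEY="sk-placeholder"
# Leave unset to use the default (https://api.openai.com/v1/), or point it at
# an OpenAI-compatible endpoint. This URL is a made-up example.
export CODER_AIBRIDGE_OPENAI_BASE_URL="https://llm-proxy.example.com/v1/"

# Hypothetical pre-flight helper (not part of the coder CLI): returns non-zero
# if AI Bridge is not enabled or no OpenAI key is set.
check_aibridge_env() {
  if [ "${CODER_AIBRIDGE_ENABLED:-}" != "true" ]; then
    echo "CODER_AIBRIDGE_ENABLED is not set to true" >&2
    return 1
  fi
  if [ -z "${CODER_AIBRIDGE_OPENAI_KEY:-}" ]; then
    echo "CODER_AIBRIDGE_OPENAI_KEY is empty" >&2
    return 1
  fi
  return 0
}

# Start the server only when the check passes (uncomment to run):
# check_aibridge_env && coder server
```

Running the check first surfaces a missing key at startup rather than on the first proxied request.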

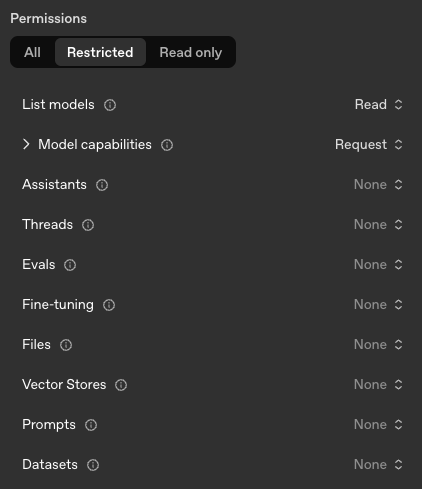

If you'd like to create an OpenAI key with minimal privileges, this is the minimum required set:

Note

See the Supported APIs section below for precise endpoint coverage and interception behavior.

Data Retention

AI Bridge records prompts, token usage, and tool invocations for auditing and monitoring purposes. By default, this data is retained for 60 days.

Configure retention using --aibridge-retention or CODER_AIBRIDGE_RETENTION:

coder server --aibridge-retention=90d

Or in YAML:

aibridge:
  retention: 90d

Set to 0 to retain data indefinitely.
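For completeness, the environment-variable form of the same setting might look like the sketch below. It assumes CODER_AIBRIDGE_RETENTION accepts the same values as the flag; the commented-out line shows the indefinite-retention case.

```shell
# Keep AI Bridge records for 90 days (equivalent to --aibridge-retention=90d).
export CODER_AIBRIDGE_RETENTION=90d

# Alternatively, retain records indefinitely:
# export CODER_AIBRIDGE_RETENTION=0

# Then start the server as usual (uncomment to run):
# coder server
```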

For duration formats, how retention works, and best practices, see the Data Retention documentation.